Preface

Ridgeback User Guide

Copyright ©2025 Ridgeback Network Defense, Inc.

Welcome to the Ridgeback User Manual. Ridgeback is designed to bring order to the inherent chaos of modern networks, empowering organizations to manage and secure their IT, OT, and IoT environments effectively. This manual will guide you through the powerful capabilities of Ridgeback, enabling you to take full control of your network, reduce cyber risk, and ensure compliance with security best practices.

As networks become more complex, the need for more visibility and proactive defense mechanisms has never been greater. Whether you're an IT manager, sysadmin, security professional, or network engineer, Ridgeback equips you with the tools to identify unauthorized devices, disrupt network attackers, and provide fact-based risk reporting in real time.

This guide will walk you through Ridgeback's easy deployment process and its wide array of features, from real-time attack disruption to enhanced network segmentation validation. It is designed to serve both technical experts and decision-makers, making complex network insights accessible and actionable.

With Ridgeback, you are not just defending your network — you are gaining clarity, control, and confidence in your ability to respond to threats. Let this manual serve as your roadmap to maximizing the value Ridgeback can bring to your organization.

Why do I need another security tool if I'm doing fine?

Ridgeback helps you remove the chaos from your IT systems. Unlike other tools that add complexity and extra work, Ridgeback is designed to make your job easier, whether you're in networking, sysadmin, security, or management. It helps you work smarter, faster, and more efficiently.

Introduction and Overview

In a world where networks are becoming more intricate and the stakes for cybersecurity have never been higher, chaos reigns. IT managers, security professionals, network engineers, and sysadmins often find themselves staring at a digital landscape rife with uncertainty, their responsibilities fragmented across a field where one overlooked vulnerability can snowball into catastrophic consequences. Enter Ridgeback—a steadfast guardian in the turbulent realm of network security.

Imagine an IT manager named Alex. Each morning, he walks into his office, knowing that beyond the calm surface of monitors and blinking servers lies an ecosystem teeming with unseen risks. Rogue devices, unauthorized communications, and probing enemy scanners wait in the digital shadows, testing his defenses. With every beep of an alert, the question looms: Is today the day chaos breaks loose?

Ridgeback changes this story. For Alex, it becomes more than just a tool—it’s a sentinel. From the moment Ridgeback is integrated, the once invisible elements of the network spring into stark relief. Devices, be they IT, OT, or IoT, are visualized in real-time, giving Alex complete situational awareness 24/7. He no longer just reacts; he anticipates. The chaos that once strained his resources transforms into order, exposing vulnerabilities and allowing him to direct his team’s efforts where they matter most.

The benefits of Ridgeback aren’t limited to tech experts alone. Financial officers and board members gain access to fact-based risk reporting, empowering them to grasp complex security metrics in plain terms. The Ridgeback Risk Index demystifies the frequency and severity of potential threats, translating complex data into intuitive scores that inform strategy and spending. No longer is there a chasm between technical teams and decision-makers; Ridgeback’s insights bridge it with clarity.

And when attacks do strike, as they inevitably will, Ridgeback’s real-time attack disruption springs into action. Its virtual defenses activate like millions of hidden guardians, neutralizing intruders and disrupting malicious activity before damage spreads. Attackers find themselves not only stopped but discouraged, knowing Ridgeback makes future attempts fruitless.

For every network engineer confirming firewall efficacy or sysadmin combing for rogue hostname queries, Ridgeback is an ally. It tracks devices, uncovers unauthorized communications, and identifies machines communicating with non-existent services—all to ensure that defenders stay one step ahead.

Ridgeback doesn’t just guard networks; it fosters confidence. IT and security teams move from reactive to proactive, with newfound time and assurance to plan, innovate, and collaborate effectively with leaders. And as chaos subsides into order, the entire organization can breathe easier, knowing its infrastructure is fortified by a system that turns complex security management into a streamlined, resilient process.

In the end, Ridgeback isn’t just a tool. It’s a partner that stands vigilant, making real-time security insights simple, clear, and actionable—proving itself essential not just for today’s risks but for whatever challenges the future may bring.

Ridgeback Architecture

The Superadmin, Admin, and User

A superadmin account controls everything, an admin account controls an organization, and a user uses Ridgeback.

An Rcore Covers a Network Segment

You only need one Rcore per network segment, or layer 2 broadcast domain. You can add multiple Rcores per segment for special coverage, but be sure there is no more than one Rcore per segment that is injecting traffic. Otherwise, the dogs will get into a fight.

Service Containers Run Inside Docker

You pull an image from a container registry. Then you turn that image into a running service container.

Services Provide Services

The primary service containers are:

- analytics: The analytics service generates useful metrics.

- enrichment: The enrichment service provices "enrichment" data to fuse with your data.

- manager: Rcores communicate with the manager service.

- policy: The policy service manages automation.

- server: This is what the web client connects to.

- surface: The surface service maps out the attack surface.

Physical or Virtual

You can run an Rcore on physical or virtual computers.

Deployment Models

These are just a few deployment models to get started. These might be considered the most basic deployment models. More sophisticated deployment models are covered in advanced documention.

A Road Warrior with a Laptop

The most popular deployment model to get started is to place both the service containers and an Rcore on a single wireless laptop. This allows the user to take Ridgeback on the road and attach to any wireless network in an instant.

More Fun than a Barrel of Laptops

Another popular deployment model is to have the service containers on one wireless laptop, and an Rcore on a different wireless laptop.

The Monolith

For small organizations that want to keep a Ridgeback server in the closet, putting the service containers and an Rcore on a single computer is an option.

Enterprise-Ready

Ready to scale up to the big time? Put the service containers on a server, and then put Rcores on other computers. Any computer running an Rcore, anywhere in the enterprise, can stream its data to the computer with the service containers. (The computer running the service containers can actually be anywhere, even in the cloud.)

Data Collection and Storage Requirements

Ridgeback is designed to analyze network traffic metadata, ensuring user privacy by not examining or storing the actual content of network traffic. This approach allows organizations to gain insights into network behavior while maintaining data security and confidentiality.

Data Volume Per Endpoint

Ridgeback collects approximately 200 bytes of metadata per network event. The volume of collected data can be summarized as follows:

- Average Collection Rate: ~10 network events per endpoint per minute

- Daily Data Volume: ~14,400 network events per endpoint, totaling ~2.88MB per day

- Monthly Data Volume: ~86.4MB per endpoint

Data Volume for a Subnet (/24)

For a fully loaded /24 subnet (nominally 254 endpoints), the data storage requirements scale as follows:

- Monthly Data Estimate: ~22.118GB

- Conservative Storage Requirement: 25GB per /24 subnet, per month

These figures provide a conservative estimate for organizations planning data storage and infrastructure capacity to support Ridgeback's network traffic metadata analysis.

System Requirements

Ridgeback deploys in three parts:

-

The first part of deployment is a MySQL-compatible database. The database can be a standalone installation or the database can be run in a container if long-term data retention is not a requirement. The database gives you the ability to look back in time and perform forensics.

-

The second part of deployment is a collection of containers. The containers run various Ridgeback services. The containers make Ridgeback a portable and highly extensible solution.

-

The third part of deployment is one Rcore per network segment that you wish to protect with Ridgeback. An Rcore is a small executable that handles reading data from and writing data to a network segment. The Rcore allows Ridgeback to work in most environments, including environments with significant operational technology (OT) and environments with significant personal devices, such as cell phones and tablets.

System Requirements for a Database

Ridgeback requires a MySQL-compatible database. A standard Ridgeback installation provides the option of loading a MariaDB database into a container for short-term data storage. The database-in-a-container option is not suitable when long-term data retention is a requirement.

Some other (external) database options are listed below.

- MySQL Standard Edition:

https://www.mysql.com/products/standard/ - MySQL Enterprise Edition:

https://www.mysql.com/products/enterprise/ - MariaDB:

https://mariadb.com/kb/en/getting-installing-and-upgrading-mariadb/ - Azure Database for MySQL:

https://azure.microsoft.com/en-us/products/mysql/ - Amazon RDS for MySQL:

https://aws.amazon.com/rds/mysql/ - MySQL (version 5.7 for Windows, macOS, Linux, and Solaris):

https://dev.mysql.com/doc/mysql-installation-excerpt/5.7/en/

The database will accumulate data about your IT infrastructure. You should implement backup and data pruning procedures according to the data retention policies at your organization.

System Requirements for Container Services

Ridgeback services run as containers. Before installing the Ridgeback services, you need to have a computer set up to run containers. Some options for a container runtime are listed below.

- Docker Desktop on Windows:

https://docs.docker.com/desktop/install/windows-install/ - Docker Desktop on Mac:

https://docs.docker.com/desktop/install/mac-install/ - Docker Desktop on Linux:

https://docs.docker.com/desktop/install/linux-install/ - Linux Containers (LXC):

https://linuxcontainers.org/lxc/getting-started/ - Mirantis Container Runtime:

https://www.mirantis.com/blog/getting-started-with-mirantis-container-runtime-on-windows-server/

The images for the Ridgeback services are less than 3.5GB in size (combined) and use Linux on the inside. (This is why Docker on Windows needs WSL installed.)

The containers will generate application logs. You should configure your container runtime environment to retain and purge logs according to the log retention policies at your organization.

The computer hosting the containers should have an IP route to the Ridgeback license server, which is license.ridgebacknet.com.

System Requirements for an Rcore Computer

Ridgeback uses a single Rcore per network segment. An Rcore is a small executable that handles reading data from and writing data to a network segment. The minimum system requirements for an Rcore version 3.x are listed below.

Windows Requirements (for an Rcore)

- Windows 11 64-bit: Home or Pro version 21H2 or higher, or Enterprise or Education version 21H2 or higher.

- Windows 10 64-bit: Home or Pro 21H1 (build 19043) or higher, or Enterprise or Education 20H2 (build 19042) or higher.

- A 64-bit processor with at least 2 cores.

- At least 4GB system memory.

- The latest version of the Npcap driver must be installed prior to installing the Rcore.

https://npcap.com/#download

Mac Requirements (for an Rcore)

- macOS version 14.6 (Sonoma) or higher.

- Intel or ARM (M1, M2, M3, M4) CPU.

- At least 4GB system memory.

Linux Requirements (for an Rcore)

- Debian, Ubuntu, or other free distribution. (Ridgeback does not support CentOS.)

- Intel or ARM CPU. (Ridgeback should work on a Raspberry Pi 4 or 5.)

- At least 4GB system memory.

Every computer running an Rcore needs an IP route to the computer hosting the Ridgeback services. An Rcore will only operate if the computer that hosts it is turned on, awake, and connected to the network.

Common Use Cases

This section covers fundamental use cases that help users leverage Ridgeback effectively. Each of these use cases not only optimizes network reliability but also bolsters security, forming a comprehensive approach to network health and integrity.

Each of these use cases supports both the reliability and security of a network by addressing potential risks, improving visibility, and reinforcing policy compliance. By implementing Ridgeback in these scenarios, organizations can enhance their ability to detect and mitigate threats while maintaining an efficient, well-regulated network environment.

Ridgeback is a versatile tool with numerous applications tailored to fit the unique needs of any network setup. While the following common use cases provide an excellent starting point, Ridgeback’s adaptability allows it to address a virtually limitless range of scenarios based on your network’s architecture and specific requirements.

Reconcile or Audit DNS Entries

Why it matters: Regularly reconciling and auditing DNS entries is crucial for identifying discrepancies between expected and actual DNS configurations. Misconfigured or outdated DNS entries can lead to traffic misdirection, system failures, or potential exposure to attackers exploiting subdomain takeovers. Ridgeback's metadata analysis helps verify that DNS activity aligns with approved entries, ensuring that network resources are correctly routed and protected from exploitation.

Eliminate Insecure Hostname Queries (e.g., LLMNR or mDNS Requests)

Why it matters: Hostname resolution protocols like Link-Local Multicast Name Resolution (LLMNR) and multicast DNS (mDNS) can be leveraged by attackers to perform man-in-the-middle attacks or gather network intelligence. These insecure queries often occur unintentionally and can lead to potential vulnerabilities. By detecting and eliminating such queries, Ridgeback reduces attack vectors, ensuring a more secure and streamlined network resolution process.

Eliminate Reconnaissance Threats (Basic Endpoint Enumeration)

Why it matters: Network reconnaissance is a common initial phase in cyber-attacks, where attackers map out endpoints and network architecture to identify targets. By analyzing network traffic for signs of unauthorized enumeration activities, Ridgeback can help prevent attackers from gaining critical insight into the network's structure. This enhances security by disrupting the early stages of an attack, making it significantly harder for potential intruders to plan their strategies.

Eliminate Active Threats (Attempts to Exchange Data with Unused Endpoints)

Why it matters: Detecting communication attempts directed at unused or unallocated IP addresses can indicate the presence of active probing or unauthorized data exchange attempts. Such attempts can be exploited by attackers to uncover vulnerabilities within the network. These endpoints, if improperly configured or overlooked, may serve as footholds for exploitation, allowing attackers to initiate malicious activity or breach network defenses. Ridgeback helps identify and block these activities, ensuring that suspicious traffic is flagged and mitigated promptly. This not only protects against potential data exfiltration but also highlights areas where network policy enforcement may need to be strengthened. By addressing these vulnerabilities, organizations can reinforce their security posture and reduce the risk of exploitation through unused network assets.

Eliminate Unused or Unapproved Services

Why it matters: Running unused or unapproved services on the network can expose vulnerabilities and provide attackers with entry points. Such services often go unnoticed and can be exploited if not properly managed. Ridgeback helps detect unexpected or unauthorized services, enabling network administrators to decommission them and maintain a streamlined, secure network environment. This also aids in reducing the attack surface and optimizing resource allocation.

Detect Data Leakage Between Network Segments

Why it matters: Data leakage between segmented network areas can undermine security strategies designed to compartmentalize and protect sensitive data. Ridgeback's ability to monitor network metadata helps identify unintentional or unauthorized data transfers between these segments, allowing for quick response to prevent potential breaches. Maintaining proper data flow segmentation ensures compliance with security policies and reduces the risk of sensitive data exposure.

Identify and Remove Unauthorized Devices

Why it matters: Unauthorized devices on a network can act as entry points for attackers or introduce vulnerabilities. Such devices can bypass traditional security measures and pose significant risks if they are compromised. Ridgeback aids in identifying these devices by analyzing network activity for unknown or rogue devices attempting to communicate within the network. Removing or quarantining these unauthorized devices strengthens the overall security posture and ensures only approved devices have access.

Audit DNS Entries

Auditing DNS entries is a fundamental practice for maintaining the security and reliability of any network. This chapter covers the essentials of authoritative nameservers, why they matter, how Ridgeback can help audit both forward and reverse DNS records, and guidance for setting up nameservers on Windows and Linux systems.

This chapter provides a foundation for auditing DNS entries with Ridgeback and configuring authoritative nameservers to support network reliability and security. This chapter is not meant to be a comprehensive treaty on DNS, but instead is something to get you started using DNS in your network.

Authoritative Nameservers

Definition: An authoritative nameserver holds the definitive records for a domain and responds to DNS queries with the most accurate information. Unlike caching or recursive nameservers that query other servers to resolve DNS requests, authoritative nameservers provide direct answers for domains they manage.

Types of Authoritative Nameservers:

- Primary (Master) Nameserver: The main server that holds the original zone records for a domain.

- Secondary (Slave) Nameserver: A backup server that obtains zone records from the primary server and can respond to queries if the primary server becomes unavailable.

Why Is an Authoritative Nameserver Important?

Authoritative nameservers play a critical role in DNS infrastructure:

- Reliability: They ensure DNS queries—both forward lookups (A/AAAA) and reverse lookups (PTR)—are answered promptly and accurately, supporting the network’s reliability.

- Security: Properly configured authoritative nameservers help prevent DNS-based attacks, such as cache poisoning, spoofing, and unauthorized reverse record tampering.

- Compliance: Auditing DNS entries, including reverse zones, can help maintain compliance with security standards and regulatory requirements.

- Redundancy: Secondary authoritative nameservers add a layer of resilience, ensuring domain availability even if the primary server fails.

Using Ridgeback to Audit DNS Records

Ridgeback offers powerful tools for auditing both forward and reverse DNS entries:

- Identify Discrepancies: Ensure A/AAAA and PTR records align—no orphaned forward entries without matching reverse, and vice versa.

- Detect Unauthorized Changes: Monitor for unexpected modifications to both forward and reverse zone files that could enable spoofing or mail-delivery issues.

- Enhance Visibility: Gain insights into DNS resolution patterns, including applications relying on reverse lookups (e.g., mail servers, logging systems).

- Strengthen Security Posture: Use metadata analysis to spot unusual reverse-DNS queries that may indicate reconnaissance or attack preparation.

How Ridgeback Works: Ridgeback collects and analyzes network traffic metadata—forward queries, reverse queries, and record changes—without storing actual packet contents. This allows administrators to validate that both forward and reverse DNS entries are functioning as intended and to spot anomalies efficiently.

Setting Up a Nameserver on Windows

Prerequisites:

- Windows Server installed.

- Access to Server Manager.

Steps:

-

Install the DNS Server Role

- Open Server Manager and navigate to Manage > Add Roles and Features.

- Select DNS Server and follow the installation prompts.

-

Configure the Forward Lookup Zone

- Open DNS Manager from Tools in Server Manager.

- Right-click Forward Lookup Zones and select New Zone.

- Choose Primary Zone, specify the domain name (e.g.,

example.com), and finish the wizard. - Right-click your new zone, choose New Host (A or AAAA) to add host records.

-

Configure the Reverse Lookup Zone

- In DNS Manager, right-click Reverse Lookup Zones and select New Zone.

- Choose Primary Zone, select IPv4 (or IPv6), and enter your network ID (e.g.,

192.168.1). - Specify the zone file name (e.g.,

1.168.192.in-addr.arpa) and finish the wizard. - Right-click the new reverse zone and select New Pointer (PTR). Enter the IP’s last octet and map it to the hostname.

-

Verify Configuration

- Open PowerShell or Command Prompt and run:

nslookup 192.168.1.1 - Confirm it returns the correct PTR record.

- Open PowerShell or Command Prompt and run:

Setting Up a Nameserver on Linux

Prerequisites:

- A Linux server with root or sudo access.

- Bind9 (or similar DNS server software) installed.

Steps:

-

Install Bind9

- Debian/Ubuntu:

sudo apt-get install bind9 - CentOS/RHEL:

sudo yum install bind

- Debian/Ubuntu:

-

Configure the DNS Server

-

Edit

/etc/bind/named.conf.localto add your forward and reverse zones:zone "example.com" { type master; file "/etc/bind/zones/example.com.zone"; }; zone "1.168.192.in-addr.arpa" { type master; file "/etc/bind/zones/1.168.192.in-addr.arpa.zone"; };

-

-

Create the Forward Zone File

-

Path:

/etc/bind/zones/example.com.zone -

Contents:

$TTL 86400 @ IN SOA ns1.example.com. admin.example.com. ( 2023110601 ; Serial 3600 ; Refresh 1800 ; Retry 604800 ; Expire 86400 ) ; Minimum TTL @ IN NS ns1.example.com. ns1 IN A 192.168.1.1 www IN A 192.168.1.10

-

-

Create the Reverse Zone File

-

Path:

/etc/bind/zones/1.168.192.in-addr.arpa.zone -

Contents:

$TTL 86400 @ IN SOA ns1.example.com. admin.example.com. ( 2023110601 ; Serial 3600 ; Refresh 1800 ; Retry 604800 ; Expire 86400 ) ; Minimum TTL IN NS ns1.example.com. 1 IN PTR ns1.example.com. 10 IN PTR www.example.com.

-

-

Restart and Verify

- Restart Bind9:

sudo systemctl restart bind9 - Test forward lookup:

dig @localhost example.com A - Test reverse lookup:

dig @localhost -x 192.168.1.1 PTR

- Restart Bind9:

With forward and reverse zones properly configured—and Ridgeback’s auditing capabilities—you’ll maintain accurate DNS mappings, improve network security, and ensure seamless hostname and IP resolution across your infrastructure.

Identify and Eliminate Insecure Hostname Queries

Hostname queries are an essential part of network communication, but not all methods for hostname resolution are secure. This chapter covers what insecure hostname queries are, how adversaries can exploit them, and steps for blocking these vulnerabilities to safeguard your network.

What Is an Insecure Hostname Query?

Definition: Insecure hostname queries are resolution methods that can be exploited by attackers due to their broadcast nature or lack of strong security measures. Two common types of insecure hostname queries are Link-Local Multicast Name Resolution (LLMNR) and Multicast DNS (mDNS):

- LLMNR: A protocol used to resolve hostnames when DNS is not available. It operates on a local network by broadcasting hostname requests.

- mDNS: Similar to LLMNR but primarily used in home or small office networks. It resolves hostnames by multicasting queries to other devices on the same local network.

Why These Protocols Are Considered Insecure:

- Lack of Authentication: LLMNR and mDNS do not have strong built-in authentication, allowing attackers to respond to hostname queries with malicious IP addresses.

- Broadcast Communication: They broadcast requests to all devices on the local network, which can easily be intercepted or spoofed.

- Man-in-the-Middle Potential: Attackers can impersonate legitimate devices and redirect traffic to malicious endpoints, leading to data interception or unauthorized access.

How Adversaries Use Insecure Hostname Queries

Attackers leverage insecure hostname queries in various ways to compromise networks:

- Responder Attacks: In this common method, attackers use tools like Responder to poison LLMNR or mDNS queries. When a device broadcasts a request for a hostname, the tool tricks the device into believing that the attacker's system is the requested resource. This enables attackers to capture credentials or reroute traffic.

- Credential Theft: By spoofing legitimate responses, attackers can trick users into sending their login details, which are then harvested for later use in more advanced attacks.

- Network Mapping and Reconnaissance: Adversaries can use the responses from LLMNR and mDNS to gather information about the devices and services available on the network, building a blueprint of the network structure to identify potential targets.

- Downgrade Attacks: Attackers may force a client to use an insecure hostname resolution method by disrupting standard DNS services, creating an opportunity to intercept communications.

How to Block Insecure Hostname Queries

Blocking or mitigating insecure hostname queries helps strengthen network security. Here’s how to do it:

A. On Windows Systems

- Disable LLMNR:

- Use Group Policy to disable LLMNR across the domain:

- Open Group Policy Management and create or edit an existing GPO.

- Navigate to Computer Configuration > Administrative Templates > Network > DNS Client.

- Set Turn off multicast name resolution to Enabled.

- Use Group Policy to disable LLMNR across the domain:

- Disable mDNS:

- There is no direct Group Policy for mDNS, but you can use PowerShell or registry tweaks to disable specific services that utilize mDNS.

B. On Linux Systems

-

Disable LLMNR:

- For systems using systemd-resolved, add or edit the configuration in

/etc/systemd/resolved.conf:[Resolve] LLMNR=no MulticastDNS=no - Restart the service with

sudo systemctl restart systemd-resolved.

- For systems using systemd-resolved, add or edit the configuration in

-

Disable mDNS:

- If avahi-daemon is installed (commonly used for mDNS services), stop and disable it:

sudo systemctl stop avahi-daemon sudo systemctl disable avahi-daemon

- If avahi-daemon is installed (commonly used for mDNS services), stop and disable it:

C. Network-Level Mitigation

- Network Segmentation: Use VLANs or network segmentation to isolate groups of devices that do not need to communicate via LLMNR or mDNS.

- Firewall Rules: Block multicast traffic related to LLMNR and mDNS at the network perimeter. Specifically:

- Block UDP traffic on port 5353 (mDNS) and port 5355 (LLMNR).

D. Leverage Ridgeback for Detection and Prevention

- Monitor Network Traffic: Ridgeback analyzes network metadata to identify patterns that indicate LLMNR or mDNS queries. This helps network administrators spot unusual or potentially malicious activity.

- Alerting and Reporting: Ridgeback can be configured to alert administrators when insecure hostname queries are detected, allowing for swift action.

- Policy Enforcement: Use Ridgeback's insights to enforce stricter network policies and reduce reliance on insecure protocols, enhancing your overall security posture.

Conclusion

Identifying and eliminating insecure hostname queries is a crucial step in protecting your network from credential theft, unauthorized access, and other malicious activities. By disabling LLMNR and mDNS, employing network-level controls, and using tools like Ridgeback to monitor traffic, you can significantly reduce the attack surface and improve the resilience of your network infrastructure.

Identify and Eliminate Reconnaissance Threats

Reconnaissance threats are among the earliest stages of cyber-attacks, where adversaries attempt to map out network architecture and identify potential targets. Understanding and addressing these threats is essential for maintaining network security and resilience.

What Is a Recon Threat?

Definition: A reconnaissance (recon) threat refers to any activity or attempt to gather information about endpoints or network structure for the purpose of identifying vulnerabilities. This activity can be performed by both friendly and potentially harmful actors, ranging from legitimate network devices to adversaries with malicious intent.

What Is Reconnaissance?

Reconnaissance is the process of scanning or probing a network to collect information about active devices, their services, open ports, IP addresses, and network topology. It is a preliminary phase in which data is collected to understand the structure and weaknesses of a network.

Types of Reconnaissance:

- Passive Reconnaissance: Involves monitoring network traffic or gathering information without actively engaging with the network (e.g., sniffing network traffic).

- Active Reconnaissance: Entails direct interaction with network components, such as ping sweeps, port scanning, or service enumeration.

Why Do Friendly Devices Conduct Reconnaissance?

Friendly reconnaissance refers to legitimate network devices performing scans or queries for beneficial reasons, such as:

- Network Discovery: Devices such as routers, printers, or administrative systems often perform network discovery to identify available resources or validate connectivity.

- Service Location: Systems might need to locate services such as DHCP servers or network printers.

- Health Checks: Monitoring tools and software agents may scan endpoints to ensure devices are functioning properly and meet compliance standards.

Example: An IT management system regularly pings or scans devices to verify availability and uptime, facilitating maintenance and troubleshooting.

Why Do Frenemy Devices Conduct Reconnaissance?

Frenemy devices are devices that aren't inherently malicious but may conduct reconnaissance in ways that can create vulnerabilities or raise security concerns. Examples include:

- Smart TVs and IoT Devices: These devices often conduct discovery to connect with other smart devices or update their network maps. While their intentions may be benign, poorly secured devices can be manipulated or compromised to perform unwanted network scanning.

- Printers and VoIP Systems: Such devices might broadcast queries to identify connected endpoints, which could lead to unintentional exposure of network details if improperly configured.

Why It Matters: Although these devices may not be acting with malicious intent, their network behavior can open pathways for exploitation if attackers gain control over them.

Why Do Adversaries Conduct Reconnaissance?

Adversaries conduct reconnaissance for several strategic reasons:

- Identifying Vulnerabilities: Attackers use recon activities to identify unpatched systems, open ports, and vulnerable services that can be exploited.

- Mapping Network Topology: Understanding the layout of the network helps attackers pinpoint high-value targets and plan subsequent attack phases.

- Credential Harvesting: Reconnaissance can reveal weaknesses in user authentication processes or reveal unprotected credential exchanges.

Example: An attacker might use tools such as Nmap to scan for active devices and open ports, providing the initial groundwork for an exploitation attempt.

How to Accommodate Friendly Reconnaissance

Accommodating friendly reconnaissance ensures that legitimate network scans can continue to support business needs without exposing the network to undue risk:

- Device Whitelisting: Configure Ridgeback or network monitoring tools to recognize and allow expected queries from trusted devices.

- Scheduled Scans: Use scheduled or periodic scans that are monitored and approved to reduce noise and avoid false positives.

- Network Segmentation: Isolate devices that conduct regular discovery in controlled subnets to limit their scope and exposure.

Best Practice: Use access controls to ensure that only authenticated and approved devices can perform network queries.

How to Block Unfriendly Reconnaissance

Blocking unfriendly reconnaissance is critical to preventing attackers from gathering intelligence on your network:

A. Use Network Security Tools

- Intrusion Detection and Prevention Systems (IDPS): Deploy IDPS tools that can identify and block scanning activity, such as port scans or unusual bursts of ICMP traffic.

- Firewalls: Configure firewall rules to limit unnecessary traffic and prevent unauthorized devices from probing your network.

- Ridgeback: Leverage Ridgeback’s capabilities to monitor network traffic metadata for signs of unauthorized enumeration attempts and alert administrators in real-time.

B. Implement Network Access Controls

- Restrict Network Access: Limit access to sensitive parts of the network based on the principle of least privilege.

- MAC Address Filtering: Use MAC address filtering to restrict which devices can communicate on the network. There are advanced methods to use Ridgeback for network access control (NAC).

- Zero Trust Architecture: Adopt a zero-trust approach that authenticates and authorizes every device and connection request. There are advanced methods to use Ridgeback to implement zero trust policies appropriate for your network.

C. Employ Network Obfuscation Techniques

- Ridgeback: Use Ridgeback phantoms to overwhelm and entangle unauthorize reconnaissance processes.

- Honeypots and Decoy Systems: Deploy honeypots that mimic legitimate network assets to detect and divert malicious reconnaissance.

- Network Address Translation (NAT): Use NAT to obscure internal IP addresses and make endpoint enumeration more challenging for potential attackers.

Conclusion

Reconnaissance threats pose a significant risk to network security, serving as the precursor to more advanced attacks. While legitimate devices may perform necessary scans and queries, distinguishing between friendly, frenemy, and adversarial reconnaissance is essential for maintaining a secure network. By accommodating beneficial scans, restricting unwanted probing, and employing advanced monitoring tools like Ridgeback, you can build a network that is both functional and resilient against recon threats.

Identify and Eliminate Active Threats

Active threats can signal an immediate and ongoing attempt to compromise a network. They often involve attempts to initiate communication with IP addresses or ports that are not in use, a behavior that can indicate probing, misconfiguration, or even the early stages of an attack. This chapter explores active threats in detail, their implications, and effective strategies for investigation and mitigation.

What Is an Active Threat?

Definition: An active threat refers to any attempt to initiate a TCP connection to an IP address or port that is currently not in use. These connection attempts can signal probing activity or an effort to exploit network vulnerabilities. While not all active threats are inherently malicious, they should be scrutinized to ensure network integrity.

Examples of Active Threats:

- Unauthorized port scanning.

- Repeated attempts to connect to unused IP addresses.

- Attempts to communicate with closed or restricted services.

How Do Adversaries Exploit Active Threats?

Exploitation of active threats typically occurs in the early stages of an attack. Adversaries use these methods to:

- Map Network Vulnerabilities: Attackers attempt connections to various IPs and ports to identify potential entry points or unpatched services.

- Test for Misconfigurations: Probes help attackers find weaknesses, such as forgotten or improperly secured ports.

- Launch Exploitation Campaigns: Once an attacker finds an open, unused, or misconfigured endpoint, they may deploy payloads to compromise the target.

Example: An attacker might use automated tools such as Nmap or Masscan to scan a range of IPs and ports for services that can be exploited.

How Would Friendly Devices Become an Active Threat?

Friendly devices can sometimes behave like active threats due to misconfigurations or legitimate tasks:

- Misconfigured Services: Devices or applications that are improperly set up may attempt to reach unused or non-existent IP addresses or ports.

- Scheduled Scanning: Network security tools and vulnerability scanners might perform automated scans that trigger alerts as potential threats.

- Outdated Software: Legacy systems or old software versions may have configurations that cause them to behave unpredictably, attempting unnecessary connections.

Example: An internal monitoring tool configured with incorrect IP ranges might attempt to connect repeatedly to unused endpoints, appearing as an active threat to network monitoring systems.

How to Investigate an Active Threat

Proper investigation of active threats is essential to differentiate between benign activities and real threats:

- Log Analysis: Examine firewall and network logs to trace the origin of the suspicious connection attempts.

- Network Traffic Monitoring: Use tools such as Ridgeback to analyze the metadata of connection attempts and identify patterns that indicate malicious intent.

- Device Identification: Identify the source device or system initiating the connection to understand whether it’s a friendly device, a misconfiguration, or a potential intruder.

Best Practice: Ensure that threat investigation procedures are documented and that incident response teams are trained to recognize common signatures of active threats.

Repairing Misconfigurations

If an active threat is found to be caused by a legitimate device or system, take the following steps:

- Reconfigure Systems: Correct any network misconfigurations, such as incorrect IP ranges in scanning tools or services reaching out to obsolete endpoints.

- Patch and Update: Ensure that all systems are updated with the latest security patches to mitigate the risk of unexpected behaviors.

- Remove Redundant Services: Disable or remove any legacy services that are no longer required but may still be attempting connections.

How to Accommodate a Friendly Vulnerability Scanner

Friendly vulnerability scanners play an essential role in proactively identifying weaknesses, but they must be managed to avoid appearing as active threats:

- Define IP Ranges and Rules: Clearly define the IP ranges that the scanner can target and establish rules that prevent scans from probing outside approved network boundaries.

- Whitelist Devices: Configure network monitoring tools, such as Ridgeback, to recognize and accommodate scans from approved scanners without flagging them as threats.

- Schedule Scans: Conduct vulnerability scans during predefined maintenance windows to minimize the impact on network traffic and avoid false alarms.

How to Eliminate Active Threats

To effectively eliminate active threats from your network, consider the following measures:

A. Use Network Monitoring Tools

- Ridgeback: Employ Ridgeback to continuously monitor for suspicious connection attempts to unused IPs and ports. Its metadata analysis can detect and alert on these activities in real time.

- Intrusion Detection Systems (IDS): Deploy IDS tools to identify active threat patterns and raise alerts for deeper investigation.

B. Implement Network Access Controls

- Firewall Configurations: Strengthen firewall rules to block unauthorized connection attempts and log incidents for analysis.

- Access Lists: Use access control lists (ACLs) to restrict which devices or networks are allowed to communicate with specific parts of your network.

- Segmentation: Segment your network to limit the spread of any unauthorized connection attempts and contain potential threats.

C. Strengthen Device and Application Security

- Authentication and Authorization: Ensure all devices and applications authenticate before attempting connections.

- Regular Updates and Patching: Keep devices and software up to date to prevent vulnerabilities that may lead to active threat activity.

- Network Scanning: Use internal scanning tools to proactively identify open or unused ports and services that need to be closed or secured.

Conclusion

Identifying and eliminating active threats is essential to protecting your network from potential exploitation. By understanding the sources and motivations behind these connection attempts, whether friendly or malicious, network administrators can take informed action. Through diligent monitoring, timely investigation, and robust configuration, you can mitigate active threats and fortify your network’s security posture.

Identify and Eliminate Unapproved Services

Unapproved or unauthorized services running on a network can create significant security risks. This chapter explains what services are, how they can increase risk, how to audit services in use, and how to shut down those that are unapproved. It also includes guidance on using source ports to trace the initiating process behind a service.

What Are Services?

Definition: Services refer to background processes or programs that run continuously to provide various functionalities on a system or network. These services are essential for enabling applications and ensuring smooth operations within an organization’s network.

Examples of Common Services:

- Web Services (HTTP/HTTPS): Allow web applications to run and communicate.

- File Transfer Services (FTP/SFTP): Facilitate the transfer of files between systems.

- Database Services (SQL Server, MySQL): Manage data storage and retrieval for applications.

- Remote Access Services (RDP, SSH): Provide remote access capabilities for system administration.

How Do Services Increase Risk?

Unapproved or poorly managed services can increase the risk of exploitation:

- Increased Attack Surface: Every active service adds a potential entry point into the network. Unnecessary or insecure services enlarge the network’s overall attack surface.

- Outdated and Unpatched Services: Unmonitored services can become outdated and vulnerable to exploits.

- Misconfigurations: Improper configurations can expose data or grant unauthorized access.

- Hidden Services: Undocumented services can provide covert entry points for attackers to maintain persistence within a network.

Real-World Example: A forgotten test web server running on a non-standard port with default credentials could be exploited for administrative access.

How to Audit the Services in Use

Auditing services helps identify which processes are running and whether they are approved:

Steps for Auditing Services:

-

Inventory Services: Use tools like Ridgeback to identify services running across your network.

-

Categorize and Evaluate: Classify services as essential, approved but non-essential, or unapproved.

-

Check Versions and Updates: Cross-check versions of running services with known vulnerabilities and patch requirements.

-

Trace Initiating Processes by Source Port:

-

On Windows:

- Use

netstat -anoto display active connections along with their source ports and associated process IDs (PIDs). - Use

tasklist /fi "PID eq <PID>"to identify the process by its PID. - For more detailed insight, use PowerShell:

Match theGet-NetTCPConnection | Where-Object { $_.State -eq 'Listen' } | Select-Object -Property LocalPort, OwningProcessOwningProcesswith thePIDintasklist.

- Use

-

On Linux:

- Use

ss -tulnpornetstat -tulnpto display listening ports, protocols, and associated PIDs. - Use

ps -p <PID> -o comm,argsto get details about the process and its command-line arguments. - Tools like

lsof -i :<port_number>can also be used to trace the process using a specific port.

- Use

-

Tools to Use:

- Ridgeback: Provides real-time network insights and helps identify services.

- Network Scanners (e.g., Nmap): Detect open ports and the services behind them.

- Local System Tools:

tasklist,ss, andlsoffor tracing PIDs and processes.

How to Shut Down Unapproved Services

Once unapproved services are identified, it’s important to shut them down securely:

Steps to Shut Down Unapproved Services:

- Confirm and Document: Verify the nature of the unapproved service and document its details (name, machine, and process ID).

- Coordinate with Stakeholders: Notify relevant teams before shutting down services that may impact other applications.

- Disable the Service:

- On Windows: Open

services.msc, locate the service, stop it, and set its Startup Type to Disabled. - On Linux: Use

systemctl stop <service_name>andsystemctl disable <service_name>to stop and prevent the service from starting at boot.

- On Windows: Open

- Kill Processes by PID:

- On Windows: Use

taskkill /PID <PID> /Fto forcefully terminate a process. - On Linux: Use

kill <PID>orkill -9 <PID>for forceful termination.

- On Windows: Use

- Remove or Secure: Uninstall unapproved services or reconfigure necessary ones with stronger security measures.

- Monitor for Recurrence: Implement continuous monitoring to ensure unapproved services do not reappear.

Preventative Measures:

- Network Policies: Establish clear policies outlining approved services and conditions for running them.

- Change Management: Implement a change management process to ensure services are reviewed before deployment.

- Access Controls: Restrict who can install or start services on key systems.

Conclusion

Regularly auditing and eliminating unapproved services is essential for reducing the network’s attack surface and enhancing security. Leveraging tools to trace initiating processes by source port can provide additional insight into the services running in your network. With diligent monitoring, informed action, and comprehensive policies, you can safeguard your network from unauthorized services.

Detect and Correct Leaky Segments

Network segmentation is a foundational practice in cybersecurity, designed to compartmentalize a network into manageable sections for improved security and performance. However, even well-segmented networks can experience unintended data leakage between segments, posing potential security and compliance risks. This chapter outlines how to detect and correct leaky segments in a network.

What Is a Network Segment?

Definition: A network segment is a portion of a network that is isolated or partitioned from other segments. Each segment functions as its own subnetwork with controlled communication pathways. Segmentation helps control the flow of traffic between different parts of a network and ensures that devices and data within one segment do not freely interact with another unless explicitly permitted.

Types of Network Segments:

- Physical Segments: Created using separate switches, routers, or dedicated cabling.

- Virtual Segments (VLANs): Logical segments configured within a single physical network infrastructure.

- Application Segments: Segmented based on applications or data flow rather than physical boundaries, often used in cloud environments.

Why Is Segmentation Important?

Key Benefits:

- Improved Security: Limits the spread of malware or unauthorized access by containing breaches within a single segment.

- Performance Optimization: Reduces traffic loads by controlling the flow between segments, leading to more efficient network operation.

- Regulatory Compliance: Helps organizations meet industry regulations by isolating sensitive data or systems from less secure segments.

- Enhanced Monitoring: Simplifies network monitoring by allowing focused observation of specific segments.

Example: A well-segmented network ensures that a breach in a guest Wi-Fi network does not grant an attacker access to internal business systems or sensitive data.

How to Detect Data Leakage Between Segments

Detecting data leakage between network segments is essential for maintaining network integrity and preventing unauthorized access to sensitive data.

Methods to Detect Leakage:

- Monitor Traffic Patterns:

- Use tools like Ridgeback to monitor network traffic and detect unusual data flows between segments. Ridgeback's metadata analysis helps pinpoint data exchanges that shouldn't occur, such as confidential data moving from a secure segment to a less secure one.

- Network Flow Analysis:

- Utilize network flow analysis tools (e.g., NetFlow, sFlow) to observe traffic patterns and identify unexpected data transfers between segments.

- Firewall and IDS/IPS Logs:

- Review logs from firewalls and intrusion detection/prevention systems to identify traffic that bypasses set policies or segments.

- Access Control Review:

- Regularly review access control lists (ACLs) to ensure that only authorized communications are allowed between segments.

- Packet Capture and Analysis:

- Use packet capture tools like Wireshark for a deeper investigation if data leakage is suspected. Analyze packet headers to identify traffic crossing boundaries without appropriate permissions.

Signs of Data Leakage:

- Unexpected Data Transfers: Traffic between a secure and less secure segment that isn't accounted for in network policy.

- Abnormal Bandwidth Usage: Unusual spikes in data flow between segments.

- Unauthorized Protocols: The use of protocols that shouldn't be allowed between segments, such as FTP traffic between a restricted segment and a public-facing segment.

How to Correct Segment Leakage

Once a leaky segment has been identified, immediate action is needed to correct the issue and prevent potential data breaches:

Steps to Correct Segment Leakage:

- Identify the Source and Destination:

- Determine which systems are involved in the data transfer and trace the pathways that allowed the leakage to occur.

- Adjust Network Policies:

- Modify ACLs and firewall rules to restrict unauthorized traffic between segments. Ensure that only required communication pathways are open.

- Enhance Segmentation Boundaries:

- Strengthen segmentation by implementing more granular controls such as microsegmentation, which limits communication even within the same broader segment.

- Patch and Update Systems:

- Ensure that all devices, firewalls, and software involved in segment control are up to date to close any vulnerabilities that may be contributing to leakage.

- Implement Zero Trust Principles:

- Adopt a zero-trust approach to further limit segment interactions. This requires continuous verification for devices and users attempting to access resources across segments.

- Use Encryption and VPNs:

- Encrypt traffic between segments to protect data integrity and confidentiality if data flow is necessary between segments with varying security levels.

- Continuous Monitoring:

- Establish continuous monitoring practices using tools like Ridgeback to ensure that corrections have been effective and to catch any new instances of leakage early.

Example Scenario: An internal segment used for HR services is inadvertently connected to a guest Wi-Fi network. Corrective action involves tightening firewall rules, verifying that VLANs are properly configured, and ensuring the guest network cannot route traffic to the HR segment.

Best Practices for Maintaining Segment Integrity

- Routine Audits: Regularly audit network segmentation policies and configurations to ensure they align with business and security requirements.

- Training and Awareness: Train IT and network staff on segmentation policies and the importance of keeping these configurations secure.

- Automated Alerts: Set up automated alerts for traffic anomalies that indicate potential segment leakage, allowing for a rapid response.

Conclusion

Detecting and correcting leaky network segments is vital to maintaining network security and ensuring that segmentation serves its purpose effectively. With tools like Ridgeback for continuous monitoring and best practices in place for policy management, organizations can prevent unauthorized data flows, protect sensitive information, and maintain a secure and compliant network infrastructure.

Identify Unauthorized Devices

Knowing what’s in your network is essential for effective management and security. Unauthorized devices can introduce significant risks, including data breaches and network vulnerabilities. This chapter discusses the importance of asset management and how Ridgeback can be used to audit IT assets effectively.

What's in Your Network?

Networks today can host a wide array of devices, from workstations and servers to IoT devices, mobile devices, and rogue hardware. Each device represents a potential entry point or vulnerability. Unauthorized or unknown devices can bypass security measures, leading to data breaches, unauthorized access, and other security incidents.

The Importance of Proper Asset Management

Asset management is the practice of tracking and managing all devices connected to a network. This includes identifying what devices are present, ensuring they are approved for network use, and monitoring their behavior. Here’s why asset management is critical:

1. Security:

- Prevent Unauthorized Access: Unauthorized devices can serve as entry points for attackers, allowing them to bypass traditional network defenses.

- Mitigate Insider Threats: Employees or malicious insiders may introduce unauthorized devices to exfiltrate data or disrupt operations.

- Reduce Attack Surface: By identifying and removing unauthorized devices, you reduce the number of potential vulnerabilities that attackers can exploit.

2. Compliance:

- Regulatory Requirements: Many regulatory standards, such as GDPR, HIPAA, and PCI DSS, require organizations to maintain an inventory of all connected devices to ensure data protection.

- Audit Readiness: Keeping a well-documented record of all authorized devices helps demonstrate compliance during security audits.

3. Performance and Network Efficiency:

- Avoid Network Congestion: Unauthorized devices can consume bandwidth and resources, leading to performance issues.

- Optimize Resource Allocation: Understanding what devices are on the network allows for better allocation of resources and network capacity planning.

Example: A company network might inadvertently host devices like rogue wireless access points or forgotten IoT devices that an attacker could exploit to gain unauthorized access to internal resources.

Using Ridgeback to Audit IT Assets

Ridgeback provides a robust solution for auditing IT assets and identifying unauthorized devices on a network. With Ridgeback’s metadata analysis and real-time monitoring capabilities, network administrators can gain clear visibility into all connected devices and take action to secure their network.

How Ridgeback Helps:

-

Device Discovery:

- Ridgeback scans network traffic metadata to identify all devices attempting to communicate within the network, including those that may not be recognized by traditional asset management systems.

- It can detect devices based on their network behavior, IP addresses, MAC addresses, and communication patterns.

-

Automated Alerts:

- Ridgeback can be configured to send automated alerts when an unauthorized or unknown device connects to the network. This helps ensure that administrators are aware of potential security risks in real-time.

- Alerts can include detailed information such as the device’s IP address, MAC address, and the nature of its network activity.

-

Comprehensive Reporting:

- Ridgeback’s reporting features provide detailed summaries of all detected devices, highlighting which ones are authorized and which are not.

- Reports can be used to support compliance efforts by documenting the network’s asset inventory and highlighting steps taken to address unauthorized devices.

-

Behavior Analysis:

- Ridgeback doesn’t just identify devices; it also monitors their activity to detect anomalies that may indicate a security threat. For example, if an unauthorized device is trying to scan the network or access restricted areas, Ridgeback can flag this behavior for further investigation.

Steps to Audit IT Assets with Ridgeback:

- Initiate a Network Scan: Use Ridgeback to initiate a comprehensive scan of the network to identify connected devices.

- Review Alerts and Reports: Check automated alerts and detailed reports to spot any unauthorized or unknown devices.

- Verify Devices: Cross-reference detected devices with your authorized device list to confirm which are approved.

- Take Action:

- Remove Unauthorized Devices: Isolate and remove any devices that are not authorized to be on the network.

- Investigate Anomalies: Investigate any devices flagged for suspicious behavior to determine if they represent a legitimate threat.

- Update Asset Inventory: Ensure that your asset management database is updated with any new authorized devices and changes to existing ones.

Best Practices for Managing Unauthorized Devices

- Implement Network Access Control (NAC): Use NAC solutions alongside Ridgeback to enforce policies that prevent unauthorized devices from connecting to the network.

- Regularly Update Asset Inventories: Keep an up-to-date inventory of all authorized devices to make it easier to spot unauthorized ones.

- Segment the Network: Use segmentation to limit the impact of unauthorized devices that do manage to connect, containing them to isolated parts of the network.

- Continuous Monitoring: Deploy continuous monitoring practices using Ridgeback to maintain vigilance and quickly identify new or unauthorized devices as they appear.

Conclusion

Proper asset management is essential for maintaining a secure, compliant, and efficient network. With Ridgeback’s powerful device discovery and monitoring capabilities, organizations can gain better visibility into their network, identify unauthorized devices, and take swift action to mitigate risks. Implementing strong policies and using Ridgeback to support ongoing audits will help ensure that your network remains secure and well-regulated.

Using Reports and Analytics

Overview of Basic Reports

Basic reports provide a snapshot of key network activities and security events. These foundational reports help teams monitor ongoing network health and identify areas requiring attention.

- Threat Summary – An outline of identified threats within the network.

- Hostname Leakage Summary – A report on potential hostname exposures.

- Attack Surface and Matrix Summaries – Insights into network vulnerabilities and exposure.

- Endpoint Inventory – A detailed list of all network-connected devices.

- Risk Report – An assessment of the network’s overall risk profile.

Exporting Data to Spreadsheets

For deeper analysis and collaboration, data from reports can be exported as spreadsheets. Exporting data helps teams share findings effectively and conduct extended analyses that go beyond the standard reporting framework. Spreadsheet exports are ideal for:

- Custom Analyses – Conduct in-depth reviews or apply specialized metrics.

- Trend Tracking – Observe patterns over time and track improvements or risks.

- Integration – Use report data in other security management or analytics tools.

Threat Summary

The threat summary provides information on which endpoints have tried to communicate with unused addresses, or the dark space. The summary includes the hostname and IP address of each threat, the unused address the threat tried to contact, and the first and last time the threat was heard from.

Reporting on Hostname Leakage

Hostname leakage reports detect instances where internal hostname information may be visible to a malicious actor. This information can potentially expose sensitive network details, making it critical to identify and address any leaks. These reports enable administrators to quickly locate and remediate hostname exposure to minimize the risk of targeted attacks.

Reporting on the Attack Surface

The attack surface report identifies areas where the endpoints may be vulnerable, outlining:

- Vulnerable Endpoints – Devices that could be targeted by attackers.

- Exposed Services – Services accessible that could be exploited.

As a rule, you should turn off, disable, or block communications to any services that you do not need. The only services that should be exposed are services you understand and control yourself.

Endpoint Inventory

The endpoint inventory report provides a detailed list of all devices connected to the network. For each endpoint you can see what segment it is attached to (identified by Rcore), the hostname, the IP address, the MAC address, the OUI, and when the device was first and last heard from.

This inventory is crucial for asset tracking, verifying compliance, and identifying any unauthorized or high-risk devices. The endpoint inventory can be part of your IT property management procedures.

Ridgeback Risk Report

The Ridgeback Risk Report delivers a view of the network’s security posture. The report shows exposure, complexity, capacity, endpoint exposure distribution, endpoint complexity distribution, endpoint load, service load, and link load.

Exposure refers to the level of vulnerability to adverse events, such as hacking, equipment failures, and misconfigurations. Identifying and addressing hygiene concerns and misconfigurations can reduce the opportunities adversaries have to evade detection.

Complexity reflects the balance between efficiency and fragility. Increased complexity can raise the cost of maintenance and upgrades. By managing complexity, you can simplify the implementation of security measures, better isolate sensitive data, and more effectively contain potential breaches.

Capacity represents the overall scale of a network. It's crucial to monitor all endpoints and services in use to carefully manage and limit the connections and processes that could be susceptible to exploitation.

For the risk report to work, you need to enable both phantoms and the surface service.

Phantoms

Phantoms play a unique role in Ridgeback’s approach to network security, offering innovative ways to monitor, detect, and mitigate threats within the "dark space" of a network. This chapter explores what phantoms are, how they impact both devices and users, and why they are integral to proactive threat detection.

The Dark Space in Your Network

In every network, there exists a "dark space"—areas of unused IP addresses and ports. This dark space is not part of active network operations but can be revealing when interacted with. Any traffic directed toward this space is often unauthorized or unusual, serving as an early indicator of suspicious behavior. Phantoms occupy this dark space and are set up to appear as legitimate devices. By occupying and monitoring this otherwise-unused network territory, phantoms provide insights into potential threats that traditional security tools may miss.

What Are Phantoms and Why Are They Important?

Phantoms are virtual endpoints that appear to be real, network-connected devices but are designed solely to detect unauthorized activity. Phantoms do not perform any genuine network function; instead, they turn the dark space into a vast tarpit. When an entity—be it a device, user, or potential attacker—attempts to communicate with a phantom, it triggers alerts, indicating potential reconnaissance or probing activity. This activity may include scanning for open ports, searching for vulnerable devices, or attempting to map the network.

Phantoms are essential because they reveal threats in real-time without requiring an actual breach. By catching suspicious entities in the dark space, phantoms enable administrators to detect threats that may not yet have reached critical systems or sensitive data.

Recon Threats and Active Threats

Phantoms help detect two main types of threats:

-

Reconnaissance (Recon) Threats: These are attempts to gather information about the network’s layout, devices, and vulnerabilities. When attackers conduct network scans or port probes, phantoms register this activity as a recon threat, allowing administrators to monitor and respond before the attacker gains a foothold.

-

Active Threats: These occur when an endpoint initiates unauthorized activity, such as trying to exchange data with phantoms in the dark space. Active threats indicate potentially compromised devices attempting to communicate or exfiltrate data. By identifying these attempts early, phantoms enable swift responses to mitigate or contain the threat.

Why do we call these "threats?" We call them threats because they represent very clear and obvious paths through which an attacker can seize control of your network. Each threat is like an open door leading to the compromise of your network. Ignore the recon and active threats at your own risk.

As a bonus, cleaning up the recon and active threats makes for a much more hygenic and easy-to-manage network. Imagine having a network that hums along in an orderly fashion, making IT problems easier to troubleshoot and quicker to fix.

Running Without Phantoms

Operating a network without phantoms can leave critical blind spots. Without phantoms, administrators might miss out on early signs of attack, such as probing or scanning activity that would otherwise go undetected. In essence, phantoms act as a first line of defense, catching unauthorized behavior in low-traffic areas before it reaches critical infrastructure. Without this layer of detection, organizations may only become aware of threats after they have infiltrated deeper into the network, potentially leading to costly and disruptive incidents.

The Effects of Phantoms on Computers

Phantoms are passive from a device performance perspective. Since they exist in unused network spaces, they do not interfere with legitimate device communications or operations. Instead, they serve as sticky traps and leverage the network’s monitoring capabilities to detect and log interactions. For network devices, this means that phantoms offer a non-intrusive layer of security that strengthens overall network defense without impacting normal device performance.

The Effects of Phantoms on People

For network administrators and security teams, phantoms offer enhanced visibility into otherwise-hidden network activities, providing valuable insights into potential risks. Phantoms also reduce the cognitive load for security personnel by offering clear indicators of suspicious activity that can guide and prioritize response efforts.

For malicious actors like hackers and malware, phantoms gum up operations, seriously degrading an attacker's ability to execute the hacking techniques of reconnaissance and exploitation. From the attacker's perspective, engaging with phantoms feels like entering an unreliable network. In fact, many professional penetration testers (i.e., the red team) have reported that engaging Ridgeback makes it seem like their own network is having problems. The end result is that malicious actors become very noisy and easy to detect after they contact one or more Ridgeback phantoms.

For end users, the impact of phantoms is invisible but valuable. By detecting threats before they escalate, phantoms contribute to a secure network environment, ultimately reducing the likelihood of disruptive security incidents or breaches.

Summary

Phantoms are a crucial tool in Ridgeback’s security framework, utilizing the dark space in a network to detect recon and active threats before they become critical issues. By running a network with phantoms, organizations gain early insights into suspicious activity, minimizing risks while enhancing overall security posture. Phantoms provide a proactive, non-intrusive method for keeping networks safe, offering both administrators and users peace of mind that potential threats are being caught at the earliest possible stage.

Using Ridgeback to Enhance Visibility and Harden Communications Infrastructure

Modern communications infrastructure faces ongoing, sophisticated threats from adversaries who exploit weak network visibility and insecure configurations to infiltrate systems and exfiltrate critical data. Guidance (released December 2024) from the Cybersecurity and Infrastructure Security Agency (CISA) reinforces the need for robust visibility, strict configuration management, and proactive hardening of network devices. In particular, it urges organizations to:

- Strengthen visibility into network traffic and configuration changes,

- Monitor accounts and device logins for anomalies,

- Ensure patching and secure configurations,

- Limit management exposure,

- Segment networks effectively,

- Deploy strong authentication and encryption standards.

Ridgeback—a platform designed to provide real-time network visibility, detect anomalies, and enforce security policies—aligns closely with these recommended measures. By deploying Ridgeback strategically across communications infrastructure, organizations can gain the insights and controls necessary to implement CISA’s guidance effectively.

1. Strengthening Visibility into Network Activity and Configurations

CISA Guidance: The guidance emphasizes comprehensive monitoring, including scrutinizing configuration changes, tracking flows at ingress and egress points, centralizing logs, and enforcing rigorous change management.

How Ridgeback Helps:

Ridgeback continuously collects and analyzes network traffic metadata, providing a clear, near real-time view of who is talking to whom on the network. By deploying Ridgeback components (service containers and Rcores) at key network segments, organizations can:

-

Monitor Configurations in Context: Use Ridgeback’s historical event data in conjunction with external configuration management and inventory tools to identify when configuration changes correlate with suspicious traffic patterns.

-

Centralized Visibility: Ridgeback’s server provides a unified dashboard displaying authorized and unauthorized communications, endpoint inventories, and suspicious events. Organizations can correlate these insights with their change management systems and SIEM tools for holistic visibility.

-

Enforceable Policies: Ridgeback’s policy engine can trigger alerts or actions based on detected anomalies. For example, if Ridgeback detects management traffic from unexpected sources, administrators can receive alerts or Ridgeback can automatically log these events for further investigation.

2. Monitoring User and Service Accounts for Anomalies

CISA Guidance: Validate and prune inactive accounts, monitor user logins internally and externally, and establish strong authentication mechanisms.

How Ridgeback Helps:

While Ridgeback does not replace identity and access management systems, it adds a critical layer of visibility:

-

Correlating Network Events with Account Activities: Ridgeback’s metadata analysis reveals which endpoints communicate internally and externally. By integrating these insights with logs from authentication services or AAA servers, organizations can detect mismatches (e.g., an account that should only manage devices from a dedicated workstation is observed initiating other management traffic).

-

Detecting Suspicious Patterns: If an account normally accesses certain network segments, Ridgeback can highlight anomalies where that same account’s device attempts lateral movement or contacts previously unused addresses, helping organizations quickly flag potentially compromised accounts.

3. Limiting Management Exposure and Secure Configuration

CISA Guidance: Do not allow device management from the internet, use an out-of-band management network, and ensure no default passwords or insecure protocols remain. Implement network segmentation and deny unnecessary traffic.

How Ridgeback Helps:

Ridgeback can enforce network segmentation policies by detecting unauthorized communications:

-

Preventing Out-of-Policy Traffic: Ridgeback can identify any traffic crossing segment boundaries that should not be connected. If a device management session originates from outside the designated out-of-band management network, Ridgeback can flag or disrupt it.

-

Zero-Tolerance Alerts for Insecure Protocols: Ridgeback’s event data can help spot when legacy or insecure management protocols (e.g., Telnet, SNMPv1) appear. The platform’s policy engine can trigger alerts or initiate automated responses such as quarantining the offending endpoint until it is reconfigured securely.

-

Dynamic Enforcement: If Ridgeback’s policies detect an endpoint trying to use default or known weak credentials (identified by suspicious repeated attempts to contact phantom endpoints or misconfigured devices), administrators can be alerted to reset or remove those credentials.

4. Comprehensive Logging and Correlating with SIEM

CISA Guidance: Implement secure, centralized logging, analyze and correlate logs from multiple sources, and apply SIEM solutions for quicker incident identification.

How Ridgeback Helps:

Ridgeback’s database and surface mapping of events complement log-based approaches:

-

Event Fusion: While CISA suggests centralized logging, Ridgeback provides structured network event metadata. Export Ridgeback’s event data into your SIEM for correlation with firewall logs, IDS/IPS alerts, and system logs. This combined approach enables advanced analytics, making anomalies more evident.

-

Pinpointing Attack Paths: If a SIEM alert indicates suspicious activity, Ridgeback’s historical network event data can help trace the lateral movement path, identify which segments were probed, and reveal previously unseen reconnaissance attempts.

5. Baselines and Detecting Abnormal Behavior

CISA Guidance: Establish baseline behavior and alert on anomalies.

How Ridgeback Helps:

Ridgeback inherently supports building baselines by continuously collecting traffic metadata over time:

-

Normal vs. Anomalous Patterns: Ridgeback’s risk and analytics features can help define what ‘normal’ traffic looks like for each endpoint or segment. Deviations—such as a device suddenly reaching out to previously unused IP ranges—can immediately raise alerts.

-

Incident Response and Forensics: If an incident is detected, Ridgeback allows administrators to pivot through historical data to understand when abnormal behavior began, which devices were involved, and how threats spread.

6. Enforcing Strong Cryptography and Protocol Selection

CISA Guidance: Use modern encryption, authenticated protocols, and secure cryptographic algorithms. Disable weak protocols and services.

How Ridgeback Helps:

While protocol changes and cryptography configurations occur at the device and network service level, Ridgeback provides critical feedback loops:

-

Detect Non-Compliance Quickly: If insecure services reappear due to misconfiguration, Ridgeback instantly detects unauthorized attempts to communicate or contact unused IPs/phantoms. This prompts swift remediation before adversaries exploit them.

-

End-to-End Monitoring: After network hardening, Ridgeback verifies that only the intended services continue communicating. If a deprecated service is accidentally re-enabled, Ridgeback’s continuous visibility ensures it cannot remain undetected.

7. Using Ridgeback to Enforce Microsegmentation and Zero Trust

CISA Guidance: Segment networks rigorously, employ defense-in-depth, and restrict lateral movement opportunities.

How Ridgeback Helps:

Ridgeback can help realize microsegmentation and zero trust principles:

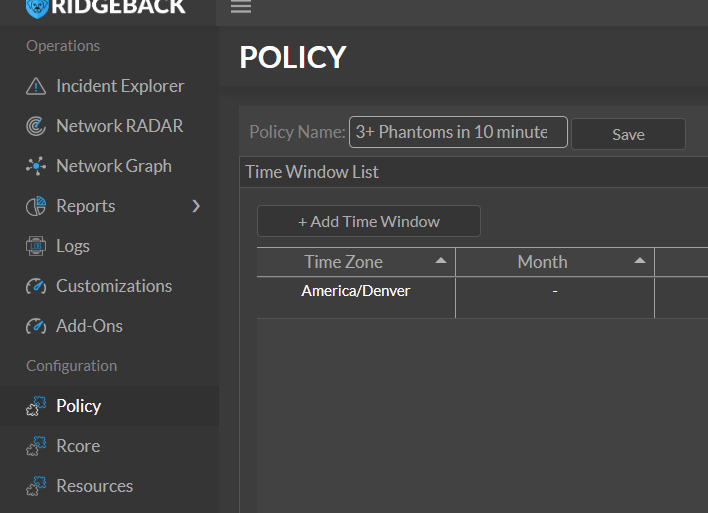

-